In her 46th year of teaching, Sharon Klein remembers the first computers incorporated into her curriculum and the introduction of the internet.

Now, artificial intelligence (AI) is streaming into her classroom.

When the English teacher first encountered ChatGPT in 2023, “cheating” was her first thought, but she has changed her mind.

“When the internet came out, everybody thought we’d all stop thinking. And when AI came out, everybody thought students would start cheating. Now, we’re told to integrate it into our classroom and not look at it as something negative, but rather to enhance our personal teaching,” Klein says.

ChatGPT was launched in November 2022 by OpenAI, a company co-founded by North Dakota native Greg Brockman.

The AI chatbot lets users type a phrase or question, much as they would into an internet search engine. It then generates “human-like text” in seconds, drawing on patterns learned from vast amounts of text gathered from the web.

It is one of several AI platforms now readily at the fingertips of students and teachers.

“It’s inevitable, it’s exciting and it also makes me a little nervous,” N.D. Superintendent of Public Instruction Levi Bachmeier says about AI.

“It’s completely changing education,” says William Grube, who holds a computer science degree from North Dakota State University and launched Gruvy Education to deliver AI training to schools across the nation.

“They’re moving away from basic papers, moving away from basic homework that we’ve been seeing for decades upon decades upon decades, and it’s really bringing education into the 21st century,” Grube says.

AI arms race

Educator-facing AI will be a powerful tool for teachers, but a balanced approach must be taken with student-facing AI, Bachmeier says.

Klein agrees. She teaches juniors and seniors at Grant County High School in Elgin and was a Bismarck State College adjunct English lecturer for 16 years, remotely teaching college-credit courses to high school students.

She introduces AI to her students, warns them about plagiarism, then looks for the most useful ways to apply AI in her classroom, such as fine-tuning an idea.

“That’s where the magic comes in is them understanding how to communicate with a large language model like ChatGPT in a way that gives them a response that is very useful,” Grube says.

“It’s not infallible, and I try to show them that, too,” Klein says, pointing out AI chatbots are not intelligently sorting facts from falsehoods, but simply gathering information from cyberspace.

As Bachmeier visits schools across the state, he hopes to learn more about how AI is helping students learn, as well as about strategies to ensure AI is not circumventing the learning process, creating more work for teachers or jeopardizing student safety.

In North Dakota schools, Grube estimates 30% of the staff are comfortable with the tools. Among the other 70%, teachers either have not had the time to fully explore AI or they’re simply avoiding it.

Over 92% of students already use AI tools regularly, and every student will use AI in their career, Grube says. ChatGPT is still the most prevalent tool in the classroom.

“Students are exploring with AI on their own. There are huge opportunities to support learning. There are also academic integrity considerations that have to be made,” Bachmeier says.

Teachers must be more mindful and vigilant about how students are using AI, Klein says.

“As a teacher, it’s not any different than plagiarism,” Klein says. “You know it wasn’t their voice and they were using vocabulary that wasn’t unique to them or something they would never say in their own discussion.”

Grube describes it as an arms race between education and anti-cheating technology.

“There’s no magical tool that’s going to show a teacher if the student used AI or not. Students are very, very tech savvy. They’ve had devices in their hands since they were very young,” Grube says.

He encourages teachers to rethink how curriculum is delivered to “make it more impossible to cheat” using AI, and to guide students to keep thinking critically and solving problems.

For example, a math teacher could have students measure a block and figure out how many blocks would fill the classroom, rather than having them solve a written word problem, which could easily be entered into an AI tool for an answer.

“These are more like local environment real-world questions instead of just a word problem or a diagram on a piece of paper,” Grube says.

Changing the classroom

“When it comes to educator-facing AI, I think there’s a lot of opportunities to help streamline all of the administrative work that we ask teachers to do,” Bachmeier says.

Writing policies and developing curriculum, lesson plans or worksheets could all be streamlined with AI.

“It’s an extension. It’s a helpful tool. I can see where novice teachers may depend upon it more than a veteran teacher just to get started, but again, you have to be careful. You have to always go back and double-check,” Klein says.

Teachers can use AI to create materials tailored to each student, or to group students based on outcome data, Grube says.

“In five years, I think we’re going to see a lot more personalized instruction,” Grube says. He envisions worksheets generated automatically at each student’s level, with a grade book producing the next worksheet or the next lesson plan.

Teachers will then focus on the delivery and connection with the students, he says.

“OK, I have a student that is at the third-grade reading level and I have a student that’s at the 10th-grade reading level. How do I tailor my curriculum to both of those students while I additionally have 22 other students to teach at the same time? So, it’s really going to automate a lot of that for them, so they can focus more on the actual delivery of that information and more on genuine help with the students,” Grube says.

“It’s just like the graphing calculator was years ago. We didn’t replace our math teachers. It enabled them to push students in new and different ways. Similarly, this should be an aid to teachers doing great work in the classroom, not a replacement,” Bachmeier says.

Technology at full throttle

AI has advanced rapidly in four years, graduating from a 10th-grade level of accuracy to performing beyond the level of someone with a doctorate, Grube says.

“It’s like the mind got smarter behind it,” Klein says. “It really can go in-depth, whereas before it was more skimming the surface. It’s very detailed.”

While the N.D. Department of Public Instruction issued AI guidance a couple of years ago, those guardrails need to be updated to help local schools discern the best uses for AI, Bachmeier says.

“We all agree that probably the time is right to bring a group of educators back together … and figure out how can we thread the needle in providing guidance that is both helpful, but also relevant, because if anything gets too specific or granular, it will become outdated the moment it is printed on paper, just given how rapidly evolving this industry is, so it’s definitely a challenge,” he says.

As a teacher, Klein worries less about how students are using AI for classwork and more about how they might be using it for social interaction.

“We can only monitor it in school. And when they get in their bedrooms at home and they have a friend who is AI-generated, we can’t,” Klein says. “You can’t pick your children’s friends, but I think this one is more dangerous.”

“One of the biggest things is people just not thinking for themselves, and not being able to have their own unique thoughts, their own independent opinions. Over a billion people use a tool like ChatGPT or Gemini every single week. That’s one in eight people worldwide. And if they’re just blindly using the tools and just believing everything that comes out of the tools, we can see how information control can become a massive, massive issue,” Grube says.

Despite these concerns, AI is here to stay, Grube says.

“There is zero chance that these tools are going away. Yes, we can get mad that they exist,” he says. “Given that the tools aren’t going away and are only going to keep getting better, what can we do to make sure that we’re capitalizing on the positives, while mitigating the risks?”

“AI is not inherently good, it’s not inherently bad,” Bachmeier says. “It is a tool, and so, we need to help districts … be aware of both the opportunities and the potential pitfalls that come along with this tool.”

___

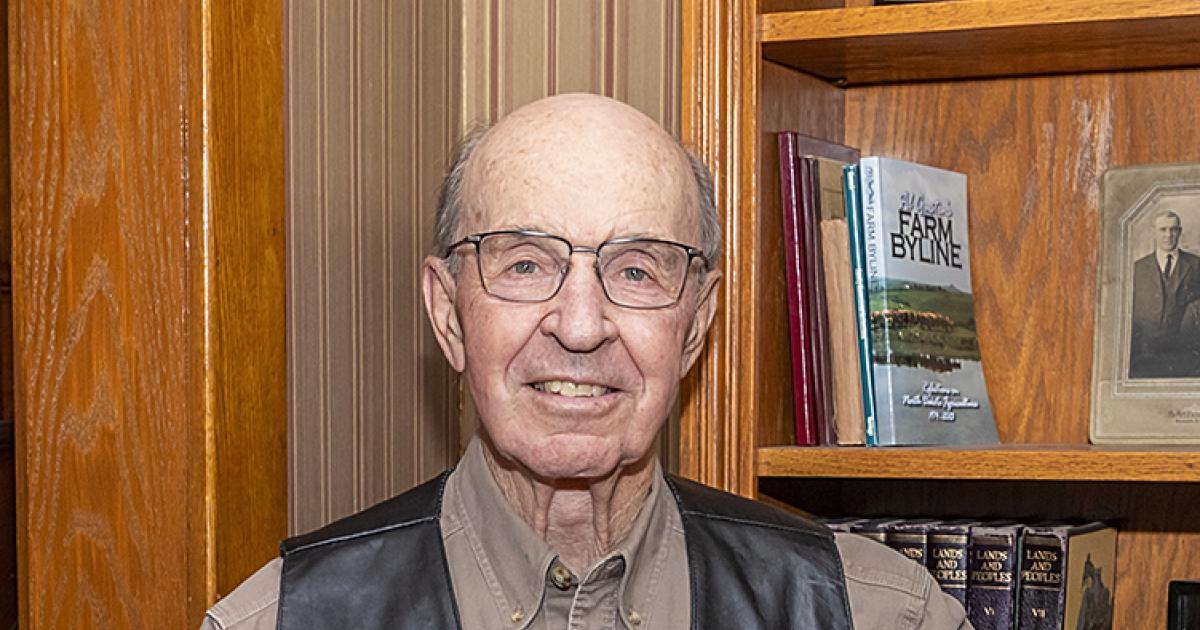

Luann Dart is a freelance writer and editor who lives in the Elgin area.